The following example implements a classic sliding window of data. In many implementations, data is added to a warehouse over time and the oldest data is aged out. Many times, this data is range partitioned by a date attribute, so that the oldest data is stored together in a single partition, and the newly loaded data is likewise stored together in a new partition. The monthly load process involves the following:

•\ Detaching the old data: The oldest partition is either dropped or exchanged with an empty table (turning the oldest partition into a table) to permit archiving of the old data.

•\ Loading and indexing of the new data: The new data is loaded into a work table and indexed and validated.

•\ Attaching the new data: Once the new data is loaded and processed, the table it is in is exchanged with an empty partition in the partitioned table, turning this newly loaded data in a table into a partition of the larger partitioned table.

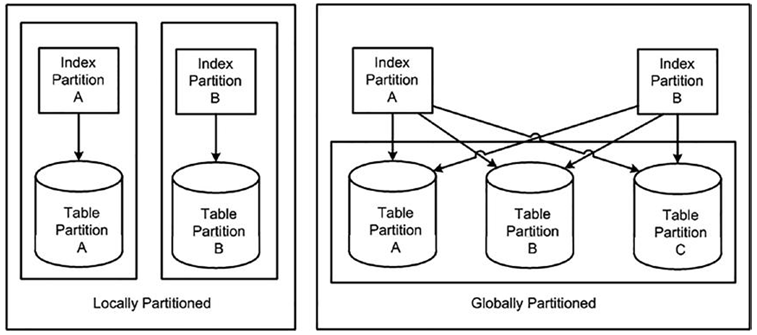

This process is repeated every month, or however often the load process is performed; it could be every day or every week. We will implement this very typical process in this section to show the impact of global partitioned indexes and demonstrate the options we have during partition operations to increase availability, allowing us to implement a sliding window of data and maintain continuous availability of data.

We’ll process yearly data in this example and have fiscal years 2020 and 2021 loaded up. The table will be partitioned by the TIMESTAMP column, and it will have two indexes created on it—one is a locally partitioned index on the ID column, and the other is a global index (nonpartitioned, in this case) on the TIMESTAMP column:

$ sqlplus eoda/foo@PDB1

SQL> drop table partitioned;

SQL> CREATE TABLE partitioned( timestamp date,id int)

PARTITION BY RANGE (timestamp)

(PARTITION fy_2020 VALUES LESS THAN

( to_date(’01-jan-2021′,’dd-mon-yyyy’) ) , PARTITION fy_2021 VALUES LESS THAN

( to_date(’01-jan-2022′,’dd-mon-yyyy’) ) ); Table created.

SQL> insert into partitioned partition(fy_2020) select to_date(’31-dec-2020′,’dd-mon-yyyy’)-mod(rownum,360), rownum from dual connect by level <= 70000;70000 rows created. SQL> insert into partitioned partition(fy_2021) select to_date(’31-dec-2021′,’dd-mon-yyyy’)-mod(rownum,360), rownum from dual connect by level <= 70000;70000 rows created. SQL> create index partitioned_idx_local on partitioned(id) LOCAL; Index created.

SQL> create index partitioned_idx_global on partitioned(timestamp) GLOBAL; Index created.

This sets up our warehouse table. The data is partitioned by fiscal year, and we have the last two years’ worth of data online. This table has two indexes: one is LOCAL and the other is GLOBAL. Now it’s the end of the year and we would like to do the following:

\ 1.\ Remove the oldest fiscal year data: We do not want to lose this data forever; we just want to age it out and archive it.

\ 2.\ Add the newest fiscal year data: It will take a while to load it, transform it, index it, and so on. We would like to do this work without impacting the availability of the current data, if at all possible.

The first step is to set up an empty table for fiscal year 2020 that looks just like the partitioned table. We’ll use this table to exchange with the FY_2020 partition in the partitioned table, turning that partition into a table and in turn emptying out the partition in the partitioned table. The net effect is that the oldest data in the partitioned table will have been in effect removed after the exchange:

SQL> create table fy_2020 ( timestamp date, id int ); Table created.

SQL> create index fy_2020_idx on fy_2020(id); Index created.

We’ll do the same to the new data to be loaded. We’ll create and load a table that structurally looks like the existing partitioned table (but that is not itself partitioned):

SQL> create table fy_2022 ( timestamp date, id int ); Table created.

SQL> insert into fy_2022 select to_date(’31-dec-2022′,’dd-mon-yyyy’)-mod(rownum,360), rownum from dual connect by level <= 70000;70000 rows created. SQL> create index fy_2022_idx on fy_2022(id) nologging; Index created.