Oracle makes a distinction between the following two types of local indexes:

•\ Local prefixed indexes: These are indexes whereby the partition keys are on the leading edge of the index definition. For example, if a table is range partitioned on a column named LOAD_DATE, a local prefixed index on that table would have LOAD_DATE as the first column in its column list.

•\ Local nonprefixed indexes: These indexes do not have the partition key on the leading edge of their column list. The index may or may not contain the partition key columns.

Both types of indexes are able to take advantage of partition elimination, both can support uniqueness (as long as the nonprefixed index includes the partition key), and so on. The fact is that a query that uses a local prefixed index will always allow for index partition elimination, whereas a query that uses a local nonprefixed index might not. This is why local nonprefixed indexes are said to be slower by some people—they do not enforce partition elimination (but they do support it).

There is nothing inherently better about a local prefixed index as opposed to a local nonprefixed index when that index is used as the initial path to the table in a query. What I mean is that if the query can start with “scan an index” as the first step, there isn’t much difference between a prefixed and a nonprefixed index.

Partition Elimination Behavior

For the query that starts with an index access, whether or not it can eliminate partitions from consideration all really depends on the predicate in your query. A small example will help demonstrate this. The following code creates a table, PARTITIONED_TABLE, that is range partitioned on a numeric column A such that values less than two will be in partition PART_1 and values less than three will be in partition PART_2:

$ sqlplus eoda/foo@PDB1

SQL> CREATE TABLE partitioned_table( a int,b int,data char(20))PARTITION BY RANGE (a)(PARTITION part_1 VALUES LESS THAN(2) tablespace p1, PARTITION part_2 VALUES LESS THAN(3) tablespace p2);Table created.

We then create both a local prefixed index, LOCAL_PREFIXED, and a local nonprefixed index, LOCAL_NONPREFIXED. Note that the nonprefixed index does not have A on the leading edge of its definition, which is what makes it a nonprefixed index:

SQL> create index local_prefixed on partitioned_table (a,b) local; Index created.

SQL> create index local_nonprefixed on partitioned_table (b) local; Index created.

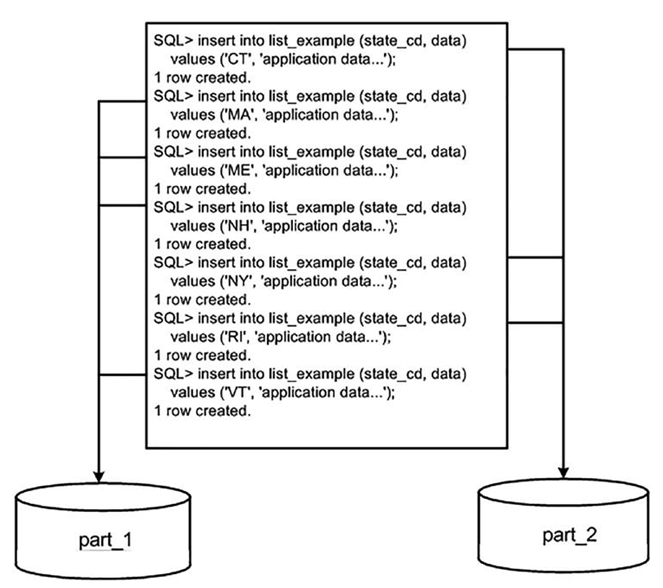

Next, we’ll insert some data into one partition and gather statistics:

SQL> insert into partitioned_table select mod(rownum-1,2)+1, rownum, ‘x’

from dual connect by level <= 70000; 70000 rows created.

We take tablespace P2 offline, which contains the PART_2 partition for both the tables and indexes:

SQL> alter tablespace p2 offline;Tablespace altered.

Taking tablespace P2 offline will prevent Oracle from accessing those specific index partitions. It will be as if we had suffered media failure, causing them to become unavailable. Now we’ll query the table to see what index partitions are needed by different queries. This first query is written to permit the use of the local prefixed index:

SQL> select * from partitioned_table where a = 1 and b = 1;

This query succeeded, and we can see why by reviewing the explain plan. We’ll use the built-in package DBMS_XPLAN to see what partitions this query accesses. The PSTART (partition start) and PSTOP (partition stop) columns in the output show us exactly what partitions this query needs to have online and available in order to succeed:

SQL> explain plan for select * from partitioned_table where a = 1 and b = 1; Explained.

Now access DBMS_XPLAN.DISPLAY and instruct it to show the basic explain plan details plus partitioning information:

SQL> select * from table(dbms_xplan.display(null,null,’BASIC +PARTITION’));

So, the query that uses LOCAL_PREFIXED succeeds. The optimizer was able to exclude PART_2 of LOCAL_PREFIXED from consideration because we specified A=1 in the query, and we can see that clearly in the plan PSTART and PSTOP are both equal to 1. Partition elimination kicked in for us. The second query fails, however:

SQL> select * from partitioned_table where b = 1;

ERROR:

ORA-00376: file 23 cannot be read at this time ORA-01110: data file 23: ‘/opt/oracle/oradata/CDB/ C217E68DF48779E1E0530101007F73B9/datafile/o1_mf_p2_jc8bg9py_.dbf’

And using the same technique, we can see why:

SQL> explain plan for select * from partitioned_table where b = 1; Explained.

SQL> select * from table(dbms_xplan.display(null,null,’BASIC +PARTITION’));

Here, the optimizer was not able to remove PART_2 of LOCAL_NONPREFIXED from consideration—it needed to look in both the PART_1 and PART_2 partitions of the index to see if B=1 was in there. Herein lies a performance issue with local nonprefixed indexes: they do not make you use the partition key in the predicate as a prefixed index does. It is not that prefixed indexes are better; it’s just that in order to use them, you must use a query that allows for partition elimination.

If we drop the LOCAL_PREFIXED index and rerun the original successful query, as follows:

SQL> drop index local_prefixed;Index dropped.

SQL> select * from partitioned_table where a = 1 and b = 1;

It succeeds, but as we’ll see, it used the same index that just a moment ago failed us. The plan shows that Oracle was able to employ partition elimination here—the predicate A=1 was enough information for the database to eliminate index partition PART_2 from consideration:

SQL> explain plan for select * from partitioned_table where a = 1 and b = 1; Explained.

SQL> select * from table(dbms_xplan.display(null,null,’BASIC +PARTITION’));

Note the PSTART and PSTOP column values of 1 and 1. This proves that the optimizer is able to perform partition elimination even for nonprefixed local indexes.

If you frequently query the preceding table with the following queries, then you might consider using a local nonprefixed index on (b,a):

select … from partitioned_table where a = :a and b = :b; select … from partitioned_table where b = :b;

That index would be useful for both of the preceding queries. The local prefixed index on (a,b) would be useful only for the first query.

The bottom line here is that you should not be afraid of nonprefixed indexes or consider them as major performance inhibitors. If you have many queries that could benefit from a nonprefixed index as outlined previously, then you should consider using one. The main concern is to ensure that your queries contain predicates that allow for index partition elimination whenever possible. The use of prefixed local indexes enforces that consideration. The use of nonprefixed indexes does not. Consider also how the index will be used. If it will be used as the first step in a query plan, there are not many differences between the two types of indexes.